On the inevitability of education for AI

Inevitably, AI will impact us

Evolutionary psychology provides us with an intriguing insight into why certain behaviors persist in human beings. For instance, obesity can be seen as a consequence of our evolutionary heritage. Humans evolved with a preference for calorie-rich foods, a useful adaptation in environments where food was scarce and access to it uncertain. This tendency, in a modern world where calorific foods are abundant and easily accessible, can lead to obesity.

Let's think, for a moment, about the last three decades of technological progress, and how communication networks and social media have transformed our behavior. According to evolutionary psychology, the human tendency to gossip has deep roots in our "caveman past." Originally, it was a key mechanism for social cohesion and order, as well as for sharing vital information about group members. With the advent of social media, this primitive gossiping instinct has been amplified and transformed. Social platforms not only facilitate the spread of rumors and unverified information but also create "echo chambers," where people are more likely to encounter and believe information that reinforces their pre-existing beliefs. The resulting spread of misinformation affects not just personal relationships but society at large, including politics and public health.

Thus, it is fascinating (and, in a way, frightening) to consider the impact of artificial intelligence (AI) on our "caveman brains," shaped over hundreds of thousands of years to fit a world very different from today's: a simpler, more direct environment. AI can serve as a tool to help us navigate and process the overabundance of information in the digital age, but it can also exacerbate some of our cognitive blind spots, such as our susceptibility to misinformation.

When an AI hallucinates, we can often identify and correct the error, because the model follows predictable patterns and its processes are, in a way, transparent and algorithm-based. In contrast, when humans "hallucinate" (when we hold misconceptions or unfounded beliefs, or fall victim to misinformation), correcting these errors is much more complicated. This is because the biases that shape our thinking are deeply rooted: confirmation bias, our tendency to believe information that reinforces our pre-existing beliefs, makes it hard for us to process and accept information that contradicts them.

Inevitably, some will use AI against us...

Any citizen can access and use AI. This democratization means that not only researchers and professionals can exploit its potential, but also organizations and individuals with less noble intentions. It's only a matter of time before these "bad actors" start using AI models for unethical or outright harmful purposes, such as large-scale misinformation campaigns, cyberbullying, or even strategies for social and political manipulation. We could fill pages with the impact of Facebook and Cambridge Analytica, or of the Russian "troll farms." Many like to draw parallels between AI and weapons technology, but the widespread availability of AI contrasts sharply with military technology, which has traditionally been restricted to governments or organizations with vast resources. The two may look like similar problems, but they will require radically different solutions.

This reality poses a great challenge to today's society: how can we govern and regulate a technology that is so powerful yet so accessible? Even as regulations, codes of good practice, and governance bodies are developed, their effectiveness will always be limited. AI evolves so rapidly that laws and regulations struggle to keep up, and the anonymity and decentralized nature of the internet make these regulations difficult to enforce. This underscores the need for a new way of thinking about security and ethics in the AI era, one in which responsibility lies not only with legislators but also with developers, users, and society as a whole, who must together foster a culture of responsibility and awareness about the use and scope of this powerful technology.

...and legislators will not be there to save us

It is touching and heartwarming to hear and read so many talking heads on the other, arguably more civilized, side of the Atlantic discussing the urgent need for a legislative framework for AI. Make no mistake: given the dynamic nature of technological innovation, we cannot rely on legislative solutions to prevent the misuse of AI. Laws, by their structure and approval process, tend to react to existing problems rather than prevent new ones. When a technology like AI advances rapidly, legislation, by its very deliberative nature, falls behind and cannot address the new ethical, privacy, or security challenges the technology poses. Moreover, AI, with its learning and adaptation capabilities, can generate situations that current regulations never anticipated. Therefore, even though legislation is a critical component of technology governance, it cannot be the only line of defense.

Protecting our civilization against harmful uses of AI can be seen in light of the "shift left" concept from agile development, applied at a societal and governance level: move governance and regulatory considerations to the earliest stages of AI's life cycle, much as we integrate security and quality from the very beginning of software development. This would mean that governance processes, including legislators and regulatory bodies, should establish fundamental principles and ethical guidelines from the start, acting as providers of core principles and ethical frameworks rather than as "gatekeepers" of the market that react to problems only after they have emerged.

Instead of building barriers after the development and deployment of AI models, this approach promotes the creation of an environment where innovation and technological development can flourish within a pre-established framework of responsibility and ethics. This methodology would not only help prevent the misuse of AI but also enhance its use in ways that maximize benefits for society, ensuring that technology advances in a way that is sustainable, safe, and aligned with our most fundamental human values.

It's the education, stupid!

How can we prepare modern cave people for the next revolution? The same way we have overcome all the previous ones: education. We learn not to raid the fridge as if we wouldn't eat again for the rest of the month. We learn to save rather than spend all our money as if we would only live to 25. We learn not to gossip more than necessary and to think critically. Education is key to addressing and overcoming some of the maladaptations that evolutionary psychology describes.

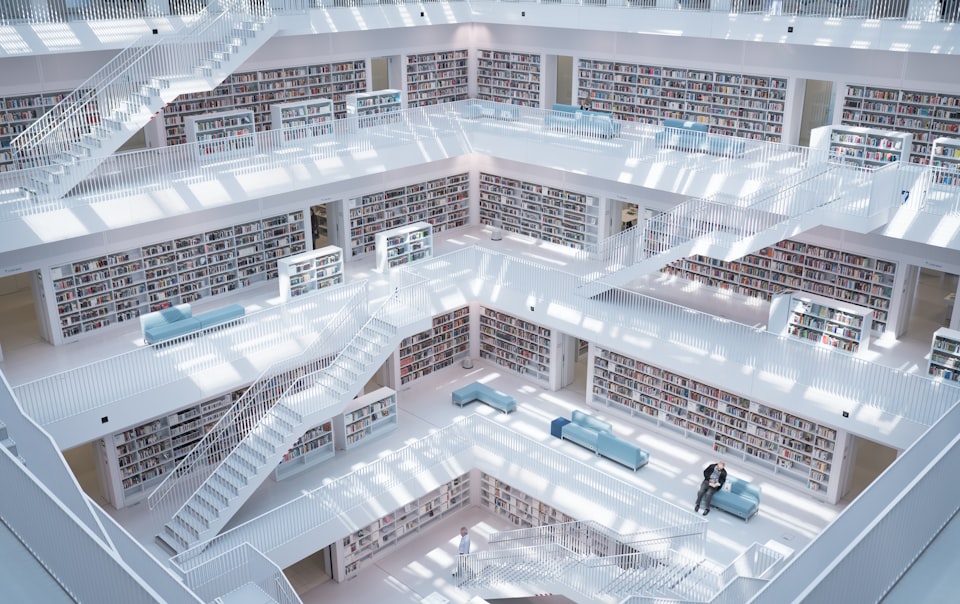

This is where the public sector has a crucial role to play in ensuring the proper use of AI in society. One of the most powerful resources every government has at its disposal is control over a far-reaching education system. Through focused, well-directed education, citizens can be prepared to understand, interact with, and responsibly use AI, as well as to recognize and combat its potential misuses.

This education should not be limited to technical skills or an understanding of how AI works; it should also include a strong component of digital ethics, critical thinking, and awareness of technology's effects on society. This holistic approach would help mitigate the risks we can identify today, such as misinformation or social manipulation, as well as those that may emerge in the future. The public sector can use its influence to ensure that all citizens are prepared for the wave that is coming as AI becomes ever more integrated into our daily lives.
